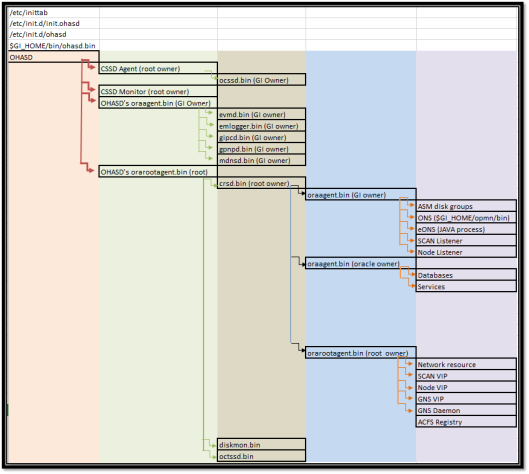

My pictorial version

In a cluster, OHASD runs as “root”, while in Oracle Restart environments it runs as “oracle”.

Administrators can issue cluster-wide commands using OHASD.

OHASD will start even if GI is explicitly disabled.

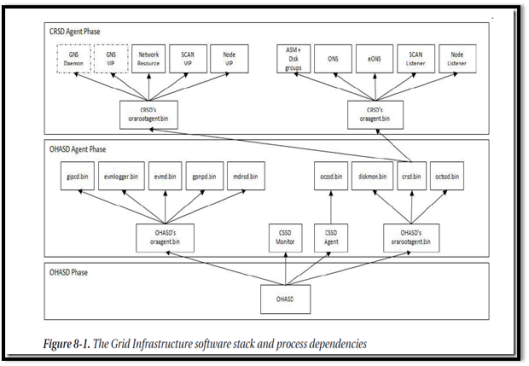

In 11gR2 and later, there are 2 new types of agent processes: Oracle Agent and Oracle Root Agent.

There are 2 sets of Oracle Agents and Oracle Root Agents: one for the HAS stack and the other for the CRS stack.

The Oracle Agent and Oracle Root Agent belonging to the HAS stack are started by the ohasd daemon. The Oracle Agent is owned by the GI software owner and is responsible for starting resources that do not require “root” privilege.

The Oracle Agent and Oracle Root Agent belonging to the CRS stack are started by the crsd daemon.

There are 2 “Oracle Root Agents”:

– The first is spawned by OHAS. It initializes resources that need elevated OS privileges, mainly the CSSD and CRSD daemons.

– The second is started by CRSD. It manages resources that require elevated privileges, mainly network-related resources.

There are also 2 “Oracle Agents”:

– One is created by the OHAS daemon. It starts resources for accessing the OCR and voting disk files.

– The other is created by the CRS daemon (CRSD). It starts all resources that do not require root access.

It runs with the GI owner’s privileges. Up to 11.1, its tasks were performed by the “racg” processes.

The OPROCD process was replaced by the “cssdagent” process in 11.2 GI. OPROCD was introduced with the 10.2.0.4 patchset for I/O fencing; in earlier releases, fencing was handled by the kernel hangcheck-timer module.

EVMD publishes Oracle Clusterware events, such as the starting and stopping of nodes, instances and services, to all nodes of the cluster.

The Event Manager Logger (EVMLOGGER) daemon is started by evmd. It subscribes to a list of events read from a configuration file and runs user-defined actions when those events occur. This daemon is intended for backward compatibility.

ONS/eONS is a publish-and-subscribe service for communicating FAN (Fast Application Notification) events to interested clients in the environment.
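EVMLOGGER and ONS/eONS are both built around publish/subscribe. A subscriber registers interest in particular event types, and each published event goes only to the subscribers that match it. The Python sketch below is a minimal in-process model of that pattern and assumes nothing about Oracle's real implementation. The `EventBus` class, the event names and the handler are all hypothetical; none of them is the EVM or ONS API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, str]
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process publish/subscribe bus (not Oracle's EVM/ONS API)."""

    def __init__(self) -> None:
        # Maps an event type to the handlers subscribed to it.
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register handler to be called for every event of event_type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Event) -> int:
        """Call each subscribed handler with payload; return how many were called."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


# EVMLOGGER-style usage: the events of interest come from a "configuration"
# (here just a list with hypothetical event names), and each one gets a
# user-defined action that records what happened.
received: List[str] = []
configured_events = ["instance_down", "node_down"]

bus = EventBus()
for name in configured_events:
    bus.subscribe(name, lambda event, name=name: received.append(f"{name}:{event['target']}"))

bus.publish("instance_down", {"target": "orcl1"})
bus.publish("service_up", {"target": "oltp"})  # not configured, so nothing is recorded
```

Only configured event types reach an action. Anything else is dropped, which mirrors how EVMLOGGER acts only on the events listed in its configuration file.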

CTSS is the Cluster Time Synchronization Service, an alternative to an NTP server. It runs in OBSERVER mode when NTP is available, and in ACTIVE mode when NTP is not available. In ACTIVE mode, the first node to start in the cluster becomes the master clock reference, and all nodes that join later become slaves.
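The CTSS mode and role rules fit a small state model. This Python sketch encodes only what the paragraph above states: OBSERVER when NTP is available; otherwise ACTIVE, with the first node to start acting as master. The `CtssModel` class and the node names are hypothetical. Real CTSS also adjusts the clocks themselves, and that part is not modelled here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CtssModel:
    """Hypothetical model of the CTSS mode/role rules (not Oracle code)."""

    ntp_available: bool
    joined: List[str] = field(default_factory=list)  # nodes in start order

    @property
    def mode(self) -> str:
        # OBSERVER when NTP is available, ACTIVE otherwise.
        return "OBSERVER" if self.ntp_available else "ACTIVE"

    @property
    def master(self) -> Optional[str]:
        # In ACTIVE mode the first node to start is the master clock reference.
        if self.mode == "ACTIVE" and self.joined:
            return self.joined[0]
        return None

    def join(self, node: str) -> str:
        """Record that a node started and return the role it takes."""
        self.joined.append(node)
        if self.mode == "OBSERVER":
            return "observer"
        return "master" if node == self.master else "slave"


cluster = CtssModel(ntp_available=False)
print(cluster.mode, cluster.join("node1"), cluster.join("node2"))  # ACTIVE master slave
```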

CSSD has 3 separate processes:

1. CSS Daemon (ocssd),

2. CSS Agent (cssdagent), &

3. CSS Monitor (cssdmonitor).

CSSDAGENT is responsible for spawning CSSD and is created by OHASD.

CSSDMONITOR monitors CSSD and overall node health, and is also spawned by OHAS.

From 11.2, ASM disk headers contain metadata that allows CSSD to start with the voting disks stored in ASM. “kfed” can be used to read the header of an ASM disk.

The “kfdhdb.vfstart” and “kfdhdb.vfend” fields tell CSS where to find the voting disk on that device. This does not require ASM to be up. Once the voting disks are identified, CSS can access them and join the cluster.

Important daemons:

| S.No | Component | 11.1 Clusterware: Linux process | 11.1 Clusterware: Comment | 11.2 Grid Infrastructure: Linux process | 11.2 Grid Infrastructure: Comment |
|---|---|---|---|---|---|
| 1 | CRS | crsd.bin | Runs as root | crsd.bin | Runs as root |
| 2 | CSS | init.cssd, ocssd and ocssd.bin | Except for ocssd.bin, the other two run as root | ocssd.bin, cssdmonitor and cssdagent | |
| 3 | EVM | evmd, evmd.bin and evmlogger | evmd runs as root | evmd.bin and evmlogger.bin | |
| 4 | ONS | ons | | | |
| 5 | ONS/eONS | | | ons/eons | ONS is Oracle Notification Service. eONS is a Java process. |
| 6 | OPROCD | oprocd | Runs as root and provides node fencing instead of the hangcheck-timer kernel module | | |
| 7 | RACG | racgmain and racgimon | | | |
| 8 | CTSS | | | octssd.bin | Runs as root |
| 9 | Oracle Agent | | | oraagent.bin | |
| 10 | Oracle Root Agent | | | orarootagent | Runs as root |
| 11 | Oracle High Availability Service | | | ohasd.bin | Runs as root through init |

CRSD runs as “root” and restarts automatically if it fails.

OCR resource configuration includes definitions of dependencies on other cluster resources, timeouts, retries, assignment and failover policies.

In RAC, CRS is responsible for monitoring DB instances, listeners and services, and for restarting them in case of a failure.

In a single instance Oracle Restart environment, application resources are managed by “ohasd” and not by “crsd”.

If “cssdagent” discovers “ocssd” has stopped, then it shuts down the node to guarantee data integrity.

“diskmon” daemon provides I/O fencing for Exadata storage.

=========================================

The “Oracle Agent” from the HA stack starts the following:

1. EVMD and EVMLOGGER

2. “gipcd”

3. “gpnpd”

4. “mDNSd”

The “Oracle Root Agent” spawned by the HA stack starts all daemons that require “root” privilege:

1. crsd

2. ctssd

3. diskmon daemon.

4. ACFS daemon.

Once CRS is started, it will create another Oracle Agent and Oracle Root Agent.

If GI is owned by the “grid” account, a second Oracle Agent is created and is responsible for:

– Starting and monitoring local ASM instance.

– ONS and eONS daemons.

– SCAN listener.

– Node Listener.

If GI is owned by the same owner as the RDBMS binaries, the “oracle” Oracle Agent performs the tasks listed above for the “grid” Oracle Agent.

The Oracle Root Agent spawned by CRSD creates the following resources:

1. GNS, if available.

2. GNS VIP, if enabled.

3. ACFS registry.

4. Network.

5. SCAN VIP

6. Node VIP.
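The startup responsibilities listed above can be stored as a simple lookup table. One use is checking which agent should have started a resource that is missing. In this Python sketch, the agent keys (for example `"crsd orarootagent"`) and the lower-cased resource labels are informal, hypothetical names taken from the lists above. They are not official Oracle resource names.

```python
from typing import Dict, List, Optional

# Which agent starts which resource in 11gR2, per the lists in this post.
# Keys and labels are informal, hypothetical names, not Oracle resource names.
STARTED_BY: Dict[str, List[str]] = {
    "ohasd oraagent": ["evmd", "evmlogger", "gipcd", "gpnpd", "mdnsd"],
    "ohasd orarootagent": ["crsd", "ctssd", "diskmon", "acfs daemon"],
    "crsd oraagent": ["asm", "ons", "eons", "scan listener", "node listener"],
    "crsd orarootagent": [
        "gns", "gns vip", "acfs registry", "network", "scan vip", "node vip",
    ],
}


def starter_of(resource: str) -> Optional[str]:
    """Return the agent that starts `resource`, or None if it is not listed."""
    wanted = resource.lower()
    for agent, resources in STARTED_BY.items():
        if wanted in resources:
            return agent
    return None


print(starter_of("SCAN VIP"))  # crsd orarootagent
```

ocssd is deliberately absent from the table, because the post says it is spawned by cssdagent rather than by one of these agents.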

The functionality provided by the Oracle Agent Process in 11gR2 was provided by racgmain and racgimon background processes in earlier releases.


Where is the Voting disk located?

With Oracle 11gR2, the voting disk can be located on Automatic Storage Management (ASM) devices.

To display the devices used for the voting disks, run the following command:

```
$ crsctl query css votedisk
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   0aa25b63b2434f80bf5cf601b0691ef7 (/dev/sdh) [CLUSTERDATA]
 2. ONLINE   b0f893e773f04f3fbfb89f66a9c86f67 (/dev/sdf) [CLUSTERDATA]
 3. ONLINE   961b89bdd7024fc7bf82c5e5902a91bb (/dev/sdk) [CLUSTERDATA]
Located 3 voting disk(s).
```
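A regular expression can pick out the data rows if you need to process this output in a script. The Python sketch below assumes the row layout shown above: index, state, file universal ID, device in parentheses and disk group in brackets. Other releases may format the output differently. The `VotingDisk` type is hypothetical.

```python
import re
from typing import List, NamedTuple


class VotingDisk(NamedTuple):
    """One data row of `crsctl query css votedisk` (hypothetical type)."""

    state: str
    file_universal_id: str
    path: str
    disk_group: str


# Matches rows like " 1. ONLINE   0aa25b63... (/dev/sdh) [CLUSTERDATA]";
# the header, separator and "Located N voting disk(s)." lines do not match.
_ROW_RE = re.compile(r"^\s*\d+\.\s+(\S+)\s+([0-9a-f]+)\s+\((.*?)\)\s+\[(.*?)\]")


def parse_votedisks(output: str) -> List[VotingDisk]:
    """Extract one VotingDisk per data row of the command output."""
    disks: List[VotingDisk] = []
    for line in output.splitlines():
        match = _ROW_RE.match(line)
        if match:
            disks.append(VotingDisk(*match.groups()))
    return disks
```

Feeding it the output above gives three `ONLINE` entries, all in `CLUSTERDATA`, on `/dev/sdh`, `/dev/sdf` and `/dev/sdk`.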

You can get the same information with an ASM query. As the output below shows, the voting disk is located in the CLUSTERDATA disk group.

```
SQL> select group_number, name, voting_files from v$asm_diskgroup;

GROUP_NUMBER NAME                           V
------------ ------------------------------ -
           1 CLUSTERDATA                    Y
           2 DATA                           N
           3 SOFTWARE                       N
```

When we use asmcmd to look for the voting files, we don’t see them in the CLUSTERDATA disk group. So where are they located?

Running kfed against a device name from the output of “crsctl query css votedisk” shows the start and end points of the voting disk on that device.

```
SQL> !kfed read /dev/sdh | more
…
kfdhdb.grptyp: 2 ; 0x026: KFDGTP_NORMAL
kfdhdb.hdrsts: 3 ; 0x027: KFDHDR_MEMBER
kfdhdb.dskname: CLUSTERDATA_0000 ; 0x028: length=16
kfdhdb.grpname: CLUSTERDATA ; 0x048: length=11
kfdhdb.fgname: CLUSTERDATA_0000 ; 0x068: length=16
…
kfdhdb.grpstmp.lo: 815948800 ; 0x0e8: USEC=0x0 MSEC=0x99 SECS=0xa MINS=0xc
kfdhdb.vfstart: 96 ; 0x0ec: 0x00000060 <=== starting point
kfdhdb.vfend: 128 ; 0x0f0: 0x00000080 <=== end point
kfdhdb.spfile: 0 ; 0x0f4: 0x00000000
```

So one of the voting disks occupies allocation units (AUs) 96 through 127 on this device; vfend (128) is the first AU after the voting file.
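This reading of the header can be automated. The Python sketch below extracts the `kfdhdb.*` fields from `kfed read` output like the excerpt above and derives the AU range. It treats `vfend` as exclusive, as the range 96 through 127 implies. It also assumes that a disk without a voting file reports 0 for both fields, which you should verify on your own system. The helper names are hypothetical.

```python
import re
from typing import Dict, Optional, Tuple

# Matches lines like "kfdhdb.vfstart:   96 ; 0x0ec: 0x00000060",
# including dotted names such as "kfdhdb.grpstmp.lo".
_FIELD_RE = re.compile(r"^\s*(kfdhdb\.[\w.]+):\s*(\S+)")


def parse_kfed_header(text: str) -> Dict[str, str]:
    """Map each kfdhdb.<field> name to its first value token."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        match = _FIELD_RE.match(line)
        if match:
            fields[match.group(1)] = match.group(2)
    return fields


def voting_file_au_range(fields: Dict[str, str]) -> Optional[Tuple[int, int]]:
    """Return (first_au, last_au) of the voting file, or None if there is none.

    vfend is treated as exclusive, so the last AU is vfend - 1.
    Assumption: vfstart == vfend == 0 means "no voting file on this disk".
    """
    start = int(fields.get("kfdhdb.vfstart", "0"))
    end = int(fields.get("kfdhdb.vfend", "0"))
    if start == 0 and end == 0:
        return None
    return start, end - 1
```

For the `/dev/sdh` header above, this yields `(96, 127)`.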

We could dump these AUs with the dd command. However, dd is not available by default on Windows, so we can instead query one of the internal X$ tables.

Example using dd. This assumes the default 1 MB allocation unit size, so AU n starts n MB into the device:

```
dd if=/dev/sdh bs=1M skip=96 count=32 | od -c
```
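The dd arguments follow directly from the header values. This Python sketch shows the arithmetic and assumes the AU size is known: 1 MiB, the ASM default, unless the disk group was created with a different AU_SIZE. AU numbering starts at 0, so skipping `vfstart` AUs lands on the first voting-file AU, and `vfend - vfstart` AUs cover the whole file. The helper names are hypothetical.

```python
from typing import Tuple

MIB = 1024 * 1024  # 1 MiB, the default ASM allocation unit size


def voting_file_byte_range(vfstart: int, vfend: int, au_size: int = MIB) -> Tuple[int, int]:
    """Return the half-open byte range [first, end) covered by AUs vfstart..vfend-1.

    AU numbering starts at 0, so AU n begins at byte n * au_size.
    """
    return vfstart * au_size, vfend * au_size


def dd_command(device: str, vfstart: int, vfend: int, au_size_mib: int = 1) -> str:
    """Build a dd command line that reads exactly the voting-file AUs.

    With bs equal to the AU size, skip counts whole AUs and count is the number of AUs.
    """
    return f"dd if={device} bs={au_size_mib}M skip={vfstart} count={vfend - vfstart}"


first, end = voting_file_byte_range(96, 128)
print(dd_command("/dev/sdh", 96, 128))  # dd if=/dev/sdh bs=1M skip=96 count=32
```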

We use the disk group number displayed earlier, together with the vfstart and vfend values reported by kfed.

```
select GROUP_KFDAT,NUMBER_KFDAT,AUNUM_KFDAT,FNUM_KFDAT from x$kfdat where group_kfdat=1 and aunum_kfdat between 96 and 127
```

This displays allocation units 96 through 127 in disk group 1. For each allocation unit, it also shows the disk number and the internal file number.

```
SQL> select GROUP_KFDAT,NUMBER_KFDAT,AUNUM_KFDAT,FNUM_KFDAT from x$kfdat where group_kfdat=1 and aunum_kfdat between 96 and 127;

GROUP_KFDAT NUMBER_KFDAT AUNUM_KFDAT FNUM_KFDAT
----------- ------------ ----------- ----------
          1            0          96    1048572
          1            0          97    1048572
          1            0          98    1048572
          1            0          99    1048572
          1            0         100    1048572
          1            0         101    1048572
……
          1            0         116    1048572
          1            0         117    1048572

GROUP_KFDAT NUMBER_KFDAT AUNUM_KFDAT FNUM_KFDAT
----------- ------------ ----------- ----------
          1            0         118    1048572
          1            0         119    1048572
          1            0         120    1048572
          1            0         121    1048572
          1            0         122    1048572
          1            0         123    1048572
          1            0         124    1048572
          1            0         125    1048572
          1            0         126    1048572
          1            0         127     104857
```
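To confirm that the whole AU range belongs to a single file, you can check the rows returned by the x$kfdat query for gaps. This Python sketch assumes the rows have already been fetched as `(GROUP_KFDAT, NUMBER_KFDAT, AUNUM_KFDAT, FNUM_KFDAT)` tuples by whatever client you use; the fetching step is not shown. The `missing_aus` helper is hypothetical.

```python
from typing import Iterable, List, Set, Tuple

# (GROUP_KFDAT, NUMBER_KFDAT, AUNUM_KFDAT, FNUM_KFDAT), as selected by the query
Row = Tuple[int, int, int, int]


def missing_aus(rows: Iterable[Row], file_number: int, first_au: int, last_au: int) -> List[int]:
    """Return the AUs in [first_au, last_au] that are NOT mapped to file_number."""
    owned: Set[int] = {au for _group, _disk, au, fnum in rows if fnum == file_number}
    return [au for au in range(first_au, last_au + 1) if au not in owned]


# Usage with a synthetic result set covering AUs 96..127 of one file:
rows = [(1, 0, au, 1048572) for au in range(96, 128)]
print(missing_aus(rows, 1048572, 96, 127))  # []
```

An empty list means every AU between vfstart and vfend - 1 maps to the same internal file.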